DeepSeek Chat RAG

DeepSeek Chat RAG is a document-based Q&A system that combines Retrieval-Augmented Generation (RAG) with a Groq-hosted LLM to answer questions from your own documents.

Installation

Installing for Claude Desktop

Manual Configuration Required

This MCP server requires manual configuration. Run the command below to open your configuration file:

npx mcpbar@latest edit -c claude

This will open your configuration file, where you can add the DeepSeek Chat RAG MCP server manually.

DeepSeek RAG Chatbot 🤖

An intelligent chatbot powered by Groq, LangChain, and ChromaDB to chat with your documents.

🌟 Introduction

DeepSeek RAG Chatbot is a powerful and intuitive application that allows you to have conversations with your own documents. By leveraging the speed of the Groq LPU Inference Engine and the versatility of LangChain, this tool transforms your static files (PDFs, DOCX, TXT, CSV) into an interactive knowledge base.

Simply upload your documents, and the system will automatically process, index, and prepare them for your questions. The user-friendly interface, built with Streamlit, makes it easy for anyone to get instant, accurate answers drawn directly from the provided content.

✨ Key Features

- Multi-Format Document Support: Upload and process various file types, including .pdf, .docx, .txt, and .csv.

- High-Speed Inferencing: Powered by Groq, delivering responses at exceptional speed for a fluid, real-time conversational experience.

- Advanced RAG Pipeline: Utilizes LangChain for robust Retrieval-Augmented Generation, ensuring answers are relevant and contextually accurate.

- Efficient Vector Storage: Employs ChromaDB to create and manage a persistent vector database of your document embeddings for fast retrieval.

- User-Friendly Interface: A clean and simple web UI built with Streamlit that includes real-time processing feedback and chat history.

- Open Source & Customizable: Fully open-source, allowing for easy customization and integration into other projects.
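As an illustration of the multi-format support listed above, the following minimal sketch separates uploaded file names into supported and unsupported types by extension. The set of extensions comes from the feature list; the names `SUPPORTED_EXTENSIONS` and `partition_uploads` are hypothetical and are not taken from the project's code.

```python
from pathlib import Path

# Extensions named in the feature list; the constant name is hypothetical.
SUPPORTED_EXTENSIONS = {".pdf", ".docx", ".txt", ".csv"}


def partition_uploads(filenames):
    """Split file names into (accepted, rejected) by extension, ignoring case."""
    accepted, rejected = [], []
    for name in filenames:
        if Path(name).suffix.lower() in SUPPORTED_EXTENSIONS:
            accepted.append(name)
        else:
            rejected.append(name)
    return accepted, rejected


accepted, rejected = partition_uploads(["report.PDF", "notes.md", "data.csv"])
```

A check like this would typically run before any loader is invoked, so that unsupported files can be reported to the user instead of failing during processing.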

⚙️ How It Works

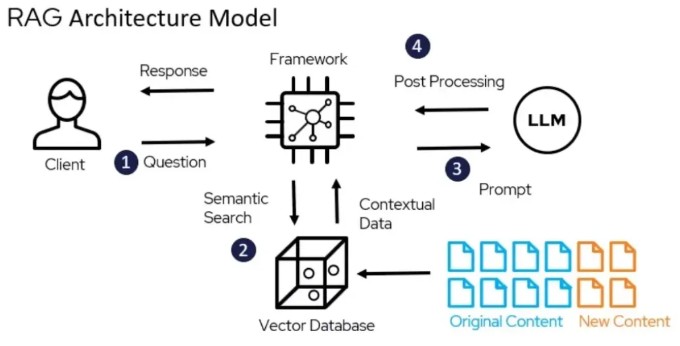

The application follows a Retrieval-Augmented Generation (RAG) architecture to answer questions from your documents. The pipeline has six stages:

- Document Loading: You upload your documents (PDF, DOCX, etc.) through the Streamlit interface.

- Text Splitting & Embedding: The system loads the documents, splits them into smaller, manageable chunks, and generates vector embeddings for each chunk.

- Vector Indexing: These embeddings are stored in a ChromaDB vector store, creating a searchable index of your documents' content.

- User Query: You ask a question in the chat interface.

- Context Retrieval: The system takes your query, embeds it, and performs a similarity search in ChromaDB to retrieve the most relevant document chunks (the "context").

- Response Generation: The retrieved context and your original query are passed to the Groq-hosted language model, which generates an answer grounded in that context.
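The stages above can be sketched end to end with the standard library alone. This is a toy illustration, not the project's implementation: word counts stand in for a real embedding model, a plain Python list stands in for ChromaDB, and the final prompt is only assembled, not sent to Groq. All function names here (`split_text`, `embed`, `cosine`, `retrieve`, `build_prompt`) are hypothetical.

```python
import math
import re
from collections import Counter


def split_text(text, chunk_size=200, overlap=50):
    """Split text into overlapping character windows."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    if not text:
        return []
    step = chunk_size - overlap
    last_start = max(len(text) - overlap, 1)
    return [text[i:i + chunk_size] for i in range(0, last_start, step)]


def embed(text):
    """Toy embedding: lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, index, k=2):
    """Return the k chunks most similar to the query (stand-in for a vector store search)."""
    q = embed(query)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def build_prompt(query, context_chunks):
    """Combine retrieved context and the user query into one prompt string."""
    context = "\n---\n".join(context_chunks)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


# Index three tiny "chunks", then retrieve context for a question.
chunks = [
    "Groq provides fast LLM inference.",
    "ChromaDB stores vector embeddings.",
    "Streamlit builds web interfaces.",
]
index = [(chunk, embed(chunk)) for chunk in chunks]
question = "Where are embeddings stored?"
prompt = build_prompt(question, retrieve(question, index, k=1))
```

In the real application, each stand-in would presumably be replaced by the corresponding component named above: a LangChain text splitter, an embedding model, a ChromaDB collection, and a Groq chat completion call that receives the assembled prompt.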

🚀 Getting Started

Follow these steps to set up and run the project on your local machine.

Prerequisites

- Python 3.8+

- A Groq API Key. You can get one for free at GroqCloud.
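Before running the app, both prerequisites can be verified with a short script. This is a minimal sketch under one assumption: that the key is supplied through an environment variable named `GROQ_API_KEY`. The source does not say how the project reads the key, so adjust the name if it differs. The function name `check_prerequisites` is hypothetical.

```python
import os
import sys


def check_prerequisites(env=os.environ, version=sys.version_info):
    """Return a list of human-readable problems; an empty list means all checks passed."""
    problems = []
    if tuple(version[:2]) < (3, 8):
        problems.append("Python 3.8 or newer is required")
    # Assumption: the API key is provided via the GROQ_API_KEY environment variable.
    if not env.get("GROQ_API_KEY"):
        problems.append("GROQ_API_KEY is not set")
    return problems


if __name__ == "__main__":
    for problem in check_prerequisites():
        print(f"Missing prerequisite: {problem}")
```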

1. Clone the Repository

git clone https://github.com/samaraxmmar/Deepseek_chat_rag.git

cd Deepseek_chat_rag

